Towards Environment Aware Social Robots using Visual Dialog

November 2019 | ML, HRI

Problem

Present-day social robots are mostly unaware of their surroundings and cannot model conversations that take the environment and the previous conversation history into account.

Goal

To develop a visual dialog module, at the intersection of computer vision and NLP, that can model responses according to the changing environment and the previous conversation history. The module is integrated into the humanoid robot Nadine.

Methodology

Literature Review

Competitive Analysis

Role

Machine Learning

Computer Vision

Natural Language Processing

Advisors

Dr. Manoj Ramanathan

Prof. Nadia Thalmann

State-of-the-art social robots are limited in their ability to understand their environment and hold a meaningful conversation about it. Visual Dialog is a research field that combines computer vision and natural language processing techniques to achieve visual awareness in conversation. In this paper, we employ a Visual Dialog system in a humanoid social robot, Nadine, to improve its social interaction and reasoning capabilities. The Visual Dialog module is a neural encoder-decoder model consisting of a memory network encoder and a discriminative decoder. The ability to carry out audio-visual scene-aware dialog with a user not only enriches human-robot interaction but also lets the user think of the robot as more than a mere object. We also discuss the various applications and the psychological impact this can have on the formation of human-robot bonds.
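To make the encoder-decoder idea concrete, here is a toy sketch in plain Python. It is not the code that runs on Nadine. It assumes the general pattern these terms usually denote: a memory network encoder attends over embeddings of earlier dialog turns using a query built from the question and the image, and a discriminative decoder ranks a fixed set of candidate answers by their similarity to the encoded context. All vectors, function names, and the element-wise sum used to fuse question and image are illustrative assumptions; a real model would use learned embeddings and layers.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def memory_encode(question, image, history):
    """Toy memory-network encoder (hypothetical helper).

    Fuses question and image into a query (assumption: element-wise sum),
    attends over the history facts, and adds the attended memory back.
    """
    query = [q + i for q, i in zip(question, image)]
    weights = softmax([dot(query, fact) for fact in history])
    attended = [
        sum(w * fact[k] for w, fact in zip(weights, history))
        for k in range(len(query))
    ]
    context = [q + a for q, a in zip(query, attended)]
    return context, weights


def discriminative_decode(context, candidates):
    """Toy discriminative decoder: score and rank candidate answers."""
    scores = softmax([dot(context, c) for c in candidates])
    ranking = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return ranking, scores


# Made-up 3-dimensional embeddings, for illustration only.
question = [1.0, 0.0, 0.0]
image = [0.0, 1.0, 0.0]
history = [[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]      # two earlier dialog turns
candidates = [[0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]   # two candidate answers

context, weights = memory_encode(question, image, history)
ranking, scores = discriminative_decode(context, candidates)
```

In this toy setup the first history turn matches the query more closely, so it receives most of the attention weight, and the second candidate answer is ranked first. The point of the discriminative design is that the module selects from a known answer pool rather than generating free text.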

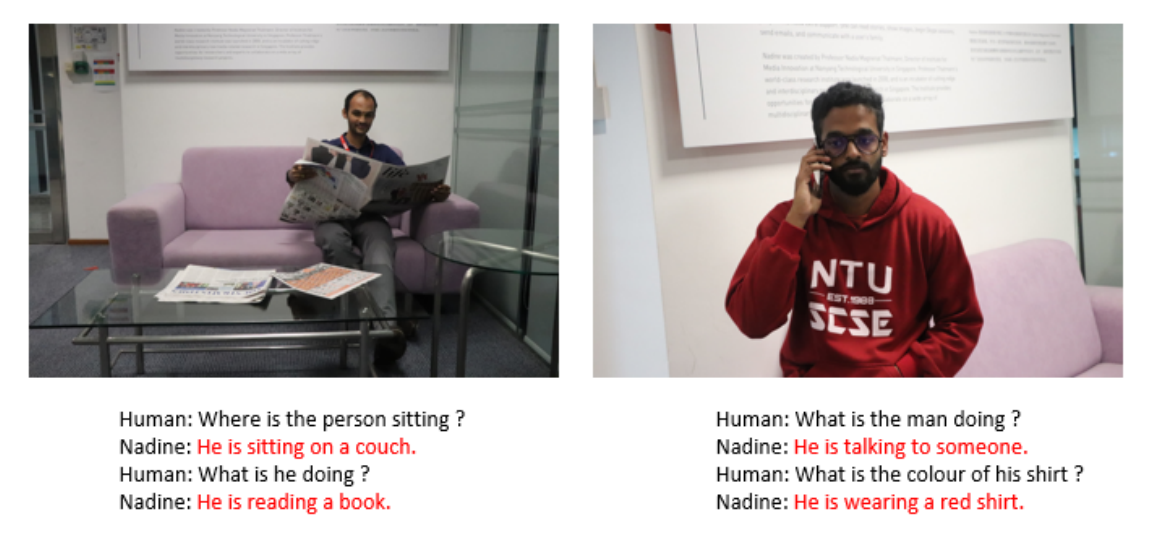

Below is a conversation sample between the robot and the user about the current scene:

–> Link to the detailed Report.